What makes one question hard and another one easy?

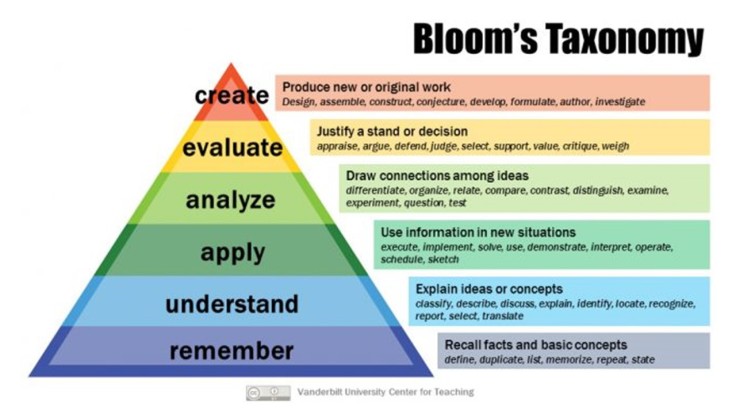

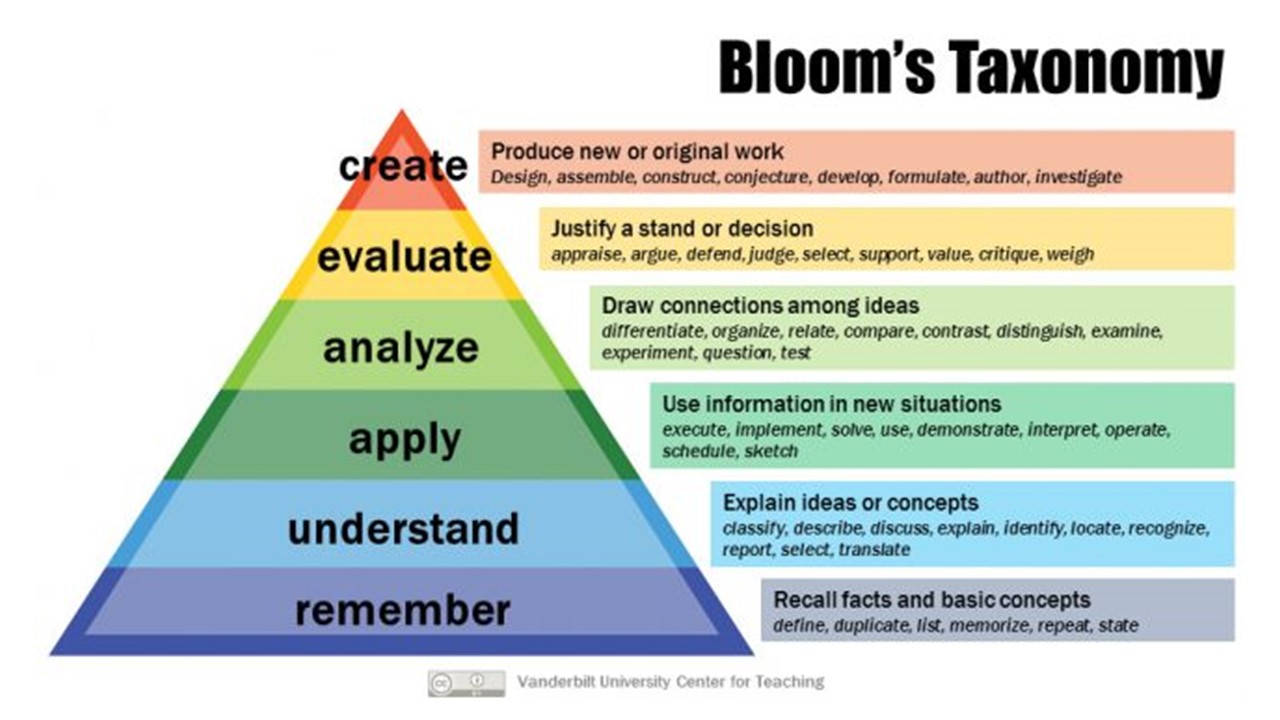

When I trained, Bloom’s taxonomy was everywhere. Personally, I haven’t really paid it much attention in quite a while, but I was reminded recently that it is still used extensively in a wide range of teaching and learning contexts. Issues with Bloom’s have received fairly thorough public airings, with both Doug Lemov and David Didau outlining some problems and presenting their own taxonomies, complete with snazzy graphics (1).

These critiques focus on knowledge and transferability. In particular, they dispute the claim that spending lesson time “analysing” improves students’ general skills of analysis (please also see (3) below).

Those critiques are important and, to my mind, absolutely in line with the evidence from cognitive science. Here, I want to focus on a different aspect of Bloom’s: the way it is used to increase challenge in a lesson. The idea is that each level of Bloom’s is associated with particular command words, and that those command words can be used to frame questions and so alter their level of difficulty or challenge.

Before I get accused of making stuff up, I’d really recommend you click through to this simple Google search. There are tons of websites, blogs and academic articles all revolving around the simple idea: using command words from higher up the pyramid provokes deeper thought, higher order thinking and cognitive challenge.

I think it’s important to treat this as a model that we can test. There isn’t much point in discussing it if we can’t agree on some kind of baseline prediction; i.e. if X is true, then we would expect to see Y as well. In our case, the hypothesis could be:

Use of these command words or levels in Bloom’s taxonomy predicts the level of challenge in a question, with higher order levels/command words predicting increased challenge

So if the Bloom’s model for challenge were true, we would expect that use of the command words predicts or even causes increased challenge. Is this the case?

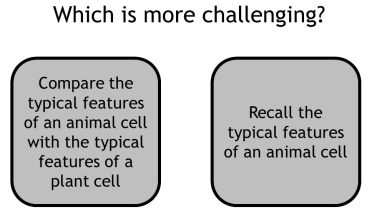

I’m afraid most of the examples I’m going to use are science-based, but I think the exploration around them should be pretty straightforward for a non-specialist to follow, and I’ve accompanied each example with a short caption elaborating a bit. What I’d like to do is compare different questions and see which we think is, well, more challenging. Let’s start with these two questions:

Throughout, the question on the left is question 1 and the question on the right is question 2. Between these two, it should be pretty obvious that question 1 (left) is harder than question 2 (right). But, and here’s the but, is that because question 1 uses the word “compare,” or is it because it’s just asking for more things? What if I rephrased it like this:

If you think about the response you would expect from a student for each question, I don’t think there’s a huge difference. Perhaps Q1 would include the word “but” or a “both…only” like I have in my caption, but does that actually make it harder? If a student writes it as a list, does that prove they know it less well, or are thinking about it less hard? I don’t think so.

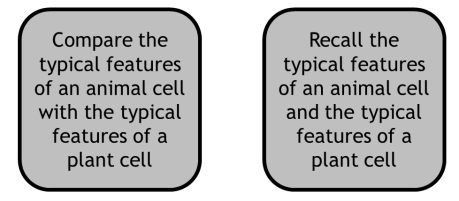

Let’s try another one:

Here I’ve made it even more explicit. The answer that you would get from a student for both questions would be exactly the same. To be sure, you could argue that Q2 is really a “compare” in disguise, but that would sort of prove my point and call the whole command word orthodoxy into question. So we have an issue:

- The command words can be superficial

That doesn’t mean they are useless for this purpose. If you are struggling to think of different ways to test or practise the same material, a command word can help you approach it from a different angle. That’s all well and good, but it fails the hypothesis: the command word doesn’t necessarily predict or determine challenge.

Let’s look at some more challenge pairs (2):

Here, I’ve controlled the command word, using “describe” for both. According to our hypothesis, they should be at a comparable level of challenge. Of course, though, a really important variable isn’t accounted for: the intrinsic difficulty of the content. Between these two questions, I would argue that Q1 is more challenging, not because of the command word or the other variables discussed below, but because the menstrual cycle is just harder to understand. I’m not going to go into exactly what makes one idea harder to grasp than another, but abstractness, causality and conflict with prior conceptions all contribute to the challenge of a question and, crucially, none of them involves a command word.

We now have two considerations:

- The command words can be superficial
- The Bloom’s model doesn’t account for intrinsic difficulty – content demand

Let’s do another challenge pair. This one deals with a practical method for preparing a particular substance (solid copper sulphate). Much as you could bake a lemon tart using a shop-bought base or make your own, the two questions differ only in where the method starts: Q1 starts earlier in the method (make your own tart base) and Q2 starts later (buy a tart base):

So which one is more challenging? Well, the command words are basically the same, neither question’s content is more intrinsically challenging than the other’s, and yet Q1 is more challenging. Again, this is not because the content is different (as above) or because the command word is significantly different, but because one simply involves more steps than the other. We haven’t accounted for the number of things in the question. Thinking back to our cells example, if I ask students to “list 5 typical animal cell organelles” as opposed to “list 3 typical animal cell organelles”, the challenge is moderated not by content or command, but by quantity – the number of things I want students to do in one go:

- The command words can be superficial
- The Bloom’s model doesn’t account for intrinsic difficulty – content demand
- Challenge can be regulated by the number of things the student needs to do – task quantity

Let’s do two more examples. Here’s the first:

At first glance, neither of these is more challenging than the other: they are identical. But, and it’s an important but, what if I teach my students how to draw the particle diagram of a solid on Monday and ask Q1 at the end of that lesson, then ask Q2 in the following Monday’s lesson, seven days later? Which question is more challenging? Obviously Q2. If students haven’t seen something for a week, testing them on it is more challenging than if they saw it five minutes ago. If a student knows the material better, then the same question on it will be less challenging (and vice versa). Challenge is therefore also determined by what a student knows:

- The command words can be superficial
- The Bloom’s model doesn’t account for intrinsic difficulty – content demand
- Challenge can be regulated by the number of things the student needs to do – task quantity
- The timing is crucial: what is easy for a child today is hard next week because they forget it – internal resources

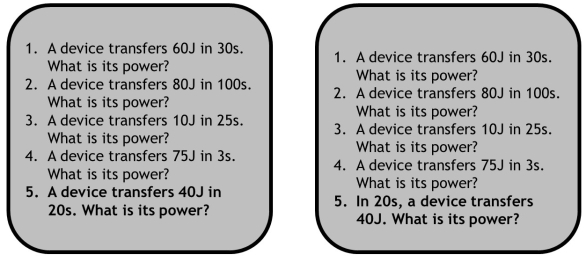

One more example, I promise. We’re almost done. Which of the below is more challenging?

Again, the command word is the same, the content is the same, the number of things to do is the same, and let’s assume that we ask them on the same day. Looking at them again, I think most would agree that there is no difference in challenge. They both involve recalling the correct formula, substituting the values, solving to find the answer and then giving the unit. More or less identical: all that’s changed is the syntax. OK, so those two are the same. What about these two:

Here, the questions from above are in bold; I’ve just added four questions before them. The “trick” is that Q5 (left) has the same syntax as Q1-4, whereas Q5 (right) has a different one. I reckon most science teachers would say that their students are more likely to get Q5 (right) wrong. I think there are a few reasons for this, but one is about support: giving students problems similar to ones they have already solved is a form of support. As I’ve argued before, over time you need to gradually withdraw support, and the box on the right does that. Instead of giving students the calm familiarity of problems already solved and strategies already implemented, it pulls that cushion away a little and forces further thought. The same applies to any other kind of support: a model answer, a worked example, a step-by-step guide, whatever. If you alter the supports you provide for students, you also alter the challenge:

- The command words can be superficial
- The Bloom’s model doesn’t account for intrinsic difficulty – content demand
- Challenge can be regulated by the number of things the student needs to do – task quantity
- The timing is crucial: what is easy for a child today is hard next week because they forget it – internal resources
- Even when the variables above are controlled, challenge can be altered by the support students are given – external supports

Regular readers of this blog can probably guess the next step, which is to turn this into an equation:

If the top bit is high, challenge is high. If the bottom bit is low, challenge is high.

Conversely, if the top bit is low, challenge is low, and if the bottom bit is high, challenge is low.
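A minimal sketch of the kind of ratio described, assuming the top bit combines content demand and task quantity and the bottom bit combines internal resources and external supports. The symbols are hypothetical shorthand, and $f$ and $g$ are deliberately left unspecified beyond being increasing in each input, so this illustrates the structure rather than reproducing the exact equation:

$$
\text{challenge} \;\propto\; \frac{f(C,\, Q)}{g(I,\, E)}
$$

where $C$ is content demand, $Q$ is task quantity, $I$ is internal resources and $E$ is external supports. Holding everything else fixed, raising $C$ or $Q$ raises challenge, and raising $I$ or $E$ lowers it. That matches the question pairs: the menstrual cycle raises $C$, the extra method steps in the copper sulphate pair raise $Q$, the week's gap in the particle diagram pair lowers $I$, and the unfamiliar syntax in the final pair lowers $E$.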

Particularly keen readers will spot the similarity between this equation and the one I adapted from Frederick Reif to simplify cognitive load theory. But I think that’s sort of the point: I’m looking for a model that helps me make predictions in the classroom about the type of work I should set or the type of question I should ask. Bloom’s doesn’t really get me all that far, but this model does. And I didn’t set out to find something that conveniently looked exactly like a model I have already written about. I worked through the question pairs just as you did and thought hard about what makes things challenging or not. I ended up back on familiar ground: a simplified model of cognitive load theory. To me, arriving there independently just strengthens the model and helps me know what I’m supposed to do in the classroom.

No doubt, someone is going to tell me that I’m just not doing Bloom’s right (3). That’s ok. But if you’re right, then Bloom’s has to be better at making predictions about challenge than my model, and I really don’t think it is.

(1) I don’t have anything quite so daring as a Bloom’s delivery service, but I have written before about how generic approaches to teaching and learning should probably be abandoned in favour of subject specific thought.

(2) As an aside, this is a really great activity to try in a department meeting – discussing what it really is about a particular question in your subject that makes it more challenging than another.

(3) I’m also aware that Bloom probably never meant for people to use his taxonomy in the way that they do. UPDATE: Thanks to Pedro for reminding me that Bloom didn’t even mention any pyramids – read more here.

October 24, 2019 at 7:14 am

I love this.

I love the way it’s written, the content and the conclusion.

October 24, 2019 at 7:23 am

Thank you! Feel free to look around while you are here 🙂

October 24, 2019 at 6:20 pm

Great post. Thank you! I would just say that prior knowledge is not always helpful, since sometimes it makes learning more difficult, especially when the student carries relevant misconceptions. So the quality of prior knowledge, not just the quantity, is pretty important. How would you express that in your equation? Maybe you should add misconceptions in the numerator and specify the kind of prior knowledge that contributes to the denominator.

October 24, 2019 at 5:27 pm

Interesting point – I would include misconceptions as part of the content difficulty

November 7, 2019 at 4:06 pm

Great article, as I’ve recently presented on Craig Barton’s ‘same surface, different deep’ questions, I am seeing similarities.

December 2, 2019 at 11:46 am

Great article with great ideas. Thanks!

August 31, 2020 at 9:50 pm

Hello

Started following you on Twitter this summer and read some of your blogs. I find the way you write and your thought process are spot on. Thank you

September 1, 2020 at 6:13 pm

happy to hear!

March 2, 2021 at 12:26 pm

As a student chemistry teacher this is Au. I really like this insight.
